Fault tolerance is crucial in a microservices architecture to ensure that the system remains available and resilient, even when individual services fail or experience performance issues. In a Spring-based microservices environment, there are several strategies and tools you can use to achieve and improve fault tolerance. Below are some key techniques, along with examples, to enhance the fault tolerance of microservices.

1. Circuit Breaker Pattern

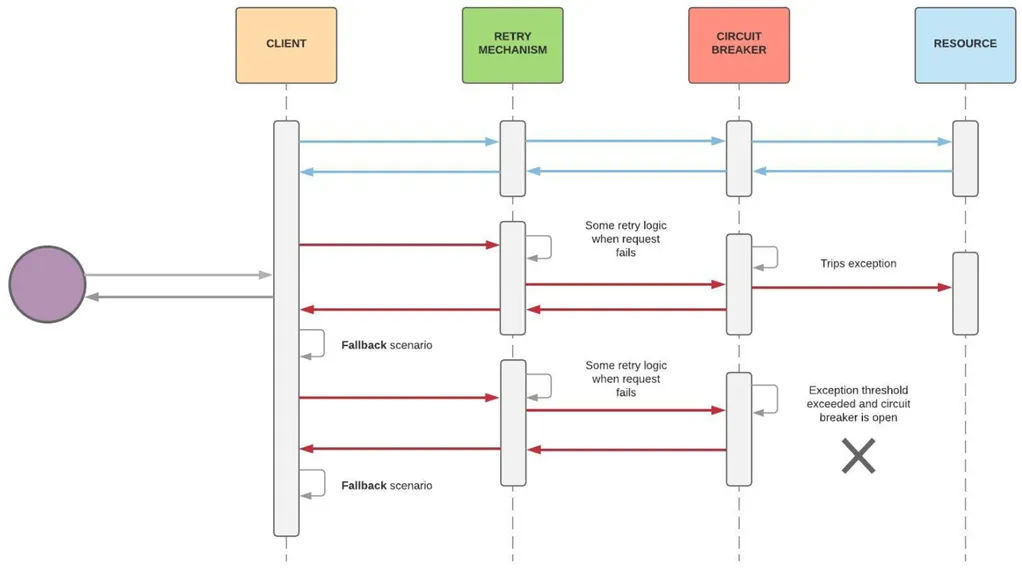

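The Circuit Breaker pattern is one of the most popular fault-tolerance techniques. It prevents cascading failures by temporarily blocking calls to a failing service and returning fallback responses instead. A breaker is normally CLOSED, so calls pass through. When too many recent calls fail it OPENS and rejects calls for a wait period. It then goes HALF-OPEN, lets a few trial calls through, and closes again if they succeed.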
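To make the mechanics concrete, here is a minimal plain-Java sketch of a count-based breaker with the usual CLOSED, OPEN and HALF_OPEN states. It is not Resilience4j's implementation. The class name `SimpleCircuitBreaker`, its constructor parameters and the injectable millisecond clock are illustrative assumptions. The parameters loosely mirror the `slidingWindowSize`, `minimumNumberOfCalls`, `failureRateThreshold`, `waitDurationInOpenState` and `permittedNumberOfCallsInHalfOpenState` settings used in the Resilience4j configuration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Simplified, count-based circuit breaker for illustration only (not Resilience4j's code).
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int slidingWindowSize;        // outcomes remembered while CLOSED
    private final int minimumNumberOfCalls;     // outcomes needed before the rate is evaluated
    private final double failureRateThreshold;  // percent, e.g. 50.0
    private final long waitMillisInOpenState;   // how long calls are rejected once OPEN
    private final int permittedCallsInHalfOpen; // trial calls allowed in HALF_OPEN
    private final LongSupplier nowMillis;       // injectable clock (an assumption, eases testing)

    private final Deque<Boolean> window = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAt;
    private int trialsStarted, trialsFinished, trialFailures;

    SimpleCircuitBreaker(int slidingWindowSize, int minimumNumberOfCalls, double failureRateThreshold,
                         long waitMillisInOpenState, int permittedCallsInHalfOpen, LongSupplier nowMillis) {
        this.slidingWindowSize = slidingWindowSize;
        this.minimumNumberOfCalls = minimumNumberOfCalls;
        this.failureRateThreshold = failureRateThreshold;
        this.waitMillisInOpenState = waitMillisInOpenState;
        this.permittedCallsInHalfOpen = permittedCallsInHalfOpen;
        this.nowMillis = nowMillis;
    }

    synchronized State state() { return state; }

    // Runs the action when permitted; returns the fallback if the call is rejected or fails.
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (!tryAcquirePermission()) {
            return fallback.get(); // short-circuit: the failing dependency is not called at all
        }
        try {
            T result = action.get();
            onResult(false);
            return result;
        } catch (RuntimeException e) {
            onResult(true);
            return fallback.get();
        }
    }

    private synchronized boolean tryAcquirePermission() {
        if (state == State.OPEN) {
            if (nowMillis.getAsLong() - openedAt < waitMillisInOpenState) return false;
            state = State.HALF_OPEN; // wait elapsed: let a few trial calls through
            trialsStarted = trialsFinished = trialFailures = 0;
        }
        if (state == State.HALF_OPEN) {
            if (trialsStarted >= permittedCallsInHalfOpen) return false;
            trialsStarted++;
        }
        return true;
    }

    private synchronized void onResult(boolean failed) {
        if (state == State.OPEN) {
            return; // late result of a call that started before the circuit opened
        }
        if (state == State.HALF_OPEN) {
            trialsFinished++;
            if (failed) trialFailures++;
            if (trialsFinished == permittedCallsInHalfOpen) {
                if (failureRate(trialFailures, trialsFinished) >= failureRateThreshold) {
                    open(); // trial calls still failing: back to OPEN
                } else {
                    state = State.CLOSED; // dependency recovered
                    window.clear();
                }
            }
            return;
        }
        window.addLast(failed);
        if (window.size() > slidingWindowSize) window.removeFirst();
        long failures = window.stream().filter(f -> f).count();
        if (window.size() >= minimumNumberOfCalls
                && failureRate(failures, window.size()) >= failureRateThreshold) {
            open();
        }
    }

    private static double failureRate(long failures, int calls) {
        return failures * 100.0 / calls;
    }

    private void open() {
        state = State.OPEN;
        openedAt = nowMillis.getAsLong();
        window.clear();
    }
}
```

While the breaker is OPEN the downstream service is not called at all, which gives it time to recover instead of being hammered by retries. Libraries such as Resilience4j build on the same idea. They add time-based windows, slow-call detection, metrics and events.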

Example with Resilience4j (Circuit Breaker):

Resilience4j is a lightweight library that provides fault tolerance mechanisms, including Circuit Breaker, Rate Limiter, Retry, and Bulkhead patterns.

Step 1: Add Dependency

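First, add the Resilience4j Spring Boot starter to your pom.xml (for Maven projects). The annotations used below are applied through Spring AOP, so spring-boot-starter-aop must be on the classpath as well. Note that resilience4j-spring-boot2 targets Spring Boot 2.x. Spring Boot 3 applications use the resilience4j-spring-boot3 artifact (2.x releases) instead.

```xml
<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-spring-boot2</artifactId>
    <version>1.7.1</version>
</dependency>
<!-- Needed so that @CircuitBreaker, @Retry and @Bulkhead are applied through AOP proxies -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-aop</artifactId>
</dependency>
```

Step 2: Configure Circuit Breaker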

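Configure the Circuit Breaker in application.yml. The same keys can be written in flattened form in application.properties.

```yaml
resilience4j:
  circuitbreaker:
    instances:
      myServiceCircuitBreaker:
        registerHealthIndicator: true
        slidingWindowSize: 10                     # evaluate the outcome of the last 10 calls
        minimumNumberOfCalls: 5                   # need at least 5 calls before computing the failure rate
        failureRateThreshold: 50                  # open when 50% or more of the calls fail
        waitDurationInOpenState: 10s              # stay open for 10 seconds before going half-open
        permittedNumberOfCallsInHalfOpenState: 3  # trial calls allowed while half-open
```

Step 3: Apply Circuit Breaker to Service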

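Annotate the method you want to protect with @CircuitBreaker. Resilience4j invokes the fallback method instead of failing in two cases: when the protected call throws an exception, and when the circuit is open and the call is rejected. The fallback must be in the same class and have the same return type. It takes the original method's parameters plus one extra exception parameter.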

```java
import io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class MyServiceController {

    @CircuitBreaker(name = "myServiceCircuitBreaker", fallbackMethod = "fallbackResponse")
    @GetMapping("/process")
    public String processRequest() {
        // Code to call another service, which might fail
        // e.g., RestTemplate call to another microservice
        return "Success response from service";
    }

    // Same return type as processRequest(), plus the exception that triggered the fallback
    public String fallbackResponse(Exception e) {
        return "Fallback response: service is currently unavailable.";
    }
}
```

2. Retry Mechanism

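Retry is a simple yet effective fault-tolerance strategy that automatically re-executes a failed operation up to a configured number of attempts before giving up. It is useful for transient failures such as network timeouts. Only wrap idempotent operations, though, because a request that timed out may already have taken effect on the other side.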
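The core loop behind a fixed-delay retry is small. The sketch below is plain Java, not Resilience4j's implementation. The names `SimpleRetry`, `execute` and the `isRetryable` predicate are hypothetical. As with Resilience4j's `maxAttempts`, the attempt count includes the initial call.

```java
import java.time.Duration;
import java.util.function.Predicate;
import java.util.function.Supplier;

// Illustrative fixed-delay retry (hypothetical helper, not Resilience4j's API).
final class SimpleRetry {
    private SimpleRetry() {}

    // maxAttempts counts the first call too, so maxAttempts = 3 means at most 2 retries.
    static <T> T execute(Supplier<T> action, int maxAttempts, Duration waitDuration,
                         Predicate<RuntimeException> isRetryable) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e;
                if (!isRetryable.test(e) || attempt == maxAttempts) {
                    break; // permanent error, or attempts exhausted
                }
                sleep(waitDuration); // fixed pause before the next attempt
            }
        }
        throw last; // rethrow the most recent failure
    }

    private static void sleep(Duration d) {
        try {
            Thread.sleep(d.toMillis());
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting to retry", ie);
        }
    }
}
```

The `isRetryable` predicate matters in practice. Retrying a validation error only wastes time, so only failures that might succeed on a second try should be retried.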

Example with Resilience4j (Retry):

Step 1: Configure Retry

Add the retry configuration in the application.yml file:

```yaml
resilience4j:
  retry:
    instances:
      myServiceRetry:
        maxAttempts: 3        # total attempts, including the initial call
        waitDuration: 2000ms  # fixed pause between attempts
```

Step 2: Apply Retry to Service Method

Use the @Retry annotation on the method that should be retried. The fallback method is invoked only after all attempts have failed.

```java
import io.github.resilience4j.retry.annotation.Retry;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class MyRetryController {

    @Retry(name = "myServiceRetry", fallbackMethod = "retryFallbackResponse")
    @GetMapping("/retryProcess")
    public String retryProcessRequest() {
        // Code to call another service, which might fail
        return "Success response from service after retry";
    }

    // Called once every configured attempt has failed
    public String retryFallbackResponse(Exception e) {
        return "Fallback response after retry: service is currently unavailable.";
    }
}
```

3. Bulkhead Pattern

The Bulkhead pattern limits the number of concurrent calls to a service or resource, isolating failures and preventing them from impacting other parts of the system. This can be implemented using a thread pool or semaphore.
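A semaphore-based bulkhead can be sketched with `java.util.concurrent.Semaphore`. Each call must obtain a permit within a bounded wait, or it is rejected with a fallback. The class `SemaphoreBulkheadSketch` is a hypothetical illustration that loosely mirrors `maxConcurrentCalls` and `maxWaitDuration`. It is not Resilience4j's implementation.

```java
import java.time.Duration;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Illustrative semaphore bulkhead: caps concurrent calls, rejects callers that wait too long.
final class SemaphoreBulkheadSketch {
    private final Semaphore permits;
    private final Duration maxWaitDuration;

    SemaphoreBulkheadSketch(int maxConcurrentCalls, Duration maxWaitDuration) {
        this.permits = new Semaphore(maxConcurrentCalls, true); // fair: waiting callers served in order
        this.maxWaitDuration = maxWaitDuration;
    }

    // Only a rejected call gets the fallback; exceptions thrown by the action propagate.
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        boolean acquired;
        try {
            acquired = permits.tryAcquire(maxWaitDuration.toMillis(), TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback.get();
        }
        if (!acquired) {
            return fallback.get(); // bulkhead full: reject instead of queueing indefinitely
        }
        try {
            return action.get();
        } finally {
            permits.release(); // always free the slot, even if the action throws
        }
    }

    int availableSlots() {
        return permits.availablePermits();
    }
}
```

Because the permit is released in `finally`, a failing call cannot leak capacity. A slow dependency can occupy at most `maxConcurrentCalls` threads, which leaves the rest of the application responsive.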

Example with Resilience4j (Bulkhead):

Step 1: Configure Bulkhead

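Add the Bulkhead configuration to your application.yml file. These properties configure Resilience4j's semaphore-based bulkhead, which is what @Bulkhead uses by default:

```yaml
resilience4j:
  bulkhead:
    instances:
      myServiceBulkhead:
        maxConcurrentCalls: 5    # at most 5 calls in flight at once
        maxWaitDuration: 2000ms  # how long a caller may wait for a free slot before being rejected
```

Step 2: Apply Bulkhead to Service Method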

Use the @Bulkhead annotation on the method to limit the number of concurrent calls.

```java
import io.github.resilience4j.bulkhead.annotation.Bulkhead;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class MyBulkheadController {

    @Bulkhead(name = "myServiceBulkhead", fallbackMethod = "bulkheadFallbackResponse")
    @GetMapping("/bulkheadProcess")
    public String bulkheadProcessRequest() {
        // Code to call another service, which might have high traffic
        return "Response from service with Bulkhead pattern";
    }

    // Invoked when the call fails or when no slot frees up within maxWaitDuration
    public String bulkheadFallbackResponse(Exception e) {
        return "Fallback response: service is overloaded.";
    }
}
```

4. Timeouts

Setting timeouts for external service calls helps prevent the system from waiting indefinitely for a response. This can be configured in RestTemplate, WebClient, or other HTTP clients used to call services.
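Client-level timeouts such as those below are the first line of defense. It can also help to put an overall time limit on a whole operation. Here is a minimal plain-Java sketch of that idea using `CompletableFuture.completeOnTimeout` (Java 9+). The helper name `callWithTimeout` and its fallback-value parameter are illustrative assumptions.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Illustrative time limit around a blocking call (hypothetical helper).
final class TimeLimitSketch {
    private TimeLimitSketch() {}

    static <T> T callWithTimeout(Supplier<T> blockingCall, Duration timeout, T fallbackValue,
                                 ExecutorService executor) {
        return CompletableFuture.supplyAsync(blockingCall, executor)
                // Completes with fallbackValue if the call has not finished in time.
                // Note: this does NOT stop the underlying call; it keeps running on the executor.
                .completeOnTimeout(fallbackValue, timeout.toMillis(), TimeUnit.MILLISECONDS)
                .exceptionally(ex -> fallbackValue) // the call itself failed
                .join();
    }
}
```

The caveat in the comment is why socket-level connect and read timeouts still matter. A future-level timeout frees the caller, but the blocked worker thread is released only when the underlying I/O gives up.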

Example with RestTemplate:

```java
import org.springframework.boot.web.client.RestTemplateBuilder;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.client.RestTemplate;

import java.time.Duration;

@Configuration
public class RestTemplateConfig {

    @Bean
    public RestTemplate restTemplate(RestTemplateBuilder builder) {
        return builder
                .setConnectTimeout(Duration.ofMillis(5000)) // max time to establish the connection
                .setReadTimeout(Duration.ofMillis(5000))    // max time to wait for data on the socket
                .build();
    }
}
```

5. Fallback Methods

Fallback methods provide a default response or alternative action when a service call fails. This can prevent the failure from propagating and allows the system to degrade gracefully.
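Graceful degradation often means more than a fixed error string. One common variant serves the last successful response when the live call fails, and a static default only when nothing is cached. The plain-Java sketch below shows that chain. `FallbackSketch` and its methods are hypothetical names, and a real service would also bound how stale a cached value may be.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Illustrative fallback chain: live call -> last known good value -> static default.
final class FallbackSketch<T> {
    private final AtomicReference<T> lastKnownGood = new AtomicReference<>();
    private final T staticDefault;

    FallbackSketch(T staticDefault) {
        this.staticDefault = staticDefault;
    }

    T get(Supplier<T> liveCall) {
        try {
            T value = liveCall.get();
            lastKnownGood.set(value); // remember for later degradation
            return value;
        } catch (RuntimeException e) {
            T cached = lastKnownGood.get();
            return cached != null ? cached : staticDefault; // degrade instead of propagating
        }
    }
}
```

Whether stale data is acceptable is a business decision. A cached product price may be fine for a few minutes, while an account balance probably is not.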

Example with Hystrix (Spring Cloud Netflix):

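Netflix Hystrix is in maintenance mode, and Spring Cloud Netflix Hystrix was removed from Spring Cloud starting with the 2020.0 release train. Resilience4j is the recommended replacement. Hystrix is still worth mentioning for historical context, and its fallback method is declared much like in Resilience4j: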

```java
import com.netflix.hystrix.contrib.javanica.annotation.HystrixCommand;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

// Requires spring-cloud-starter-netflix-hystrix and @EnableHystrix on a configuration class
@RestController
public class MyHystrixController {

    @HystrixCommand(fallbackMethod = "fallbackResponse")
    @GetMapping("/hystrixProcess")
    public String hystrixProcessRequest() {
        // Code to call another service, which might fail
        return "Success response from service";
    }

    public String fallbackResponse() {
        return "Fallback response: service is currently unavailable.";
    }
}
```

6. Health Checks

Implementing health checks for each microservice allows the system to detect and respond to failures quickly. Spring Boot Actuator provides built-in support for health checks.
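In a Spring Boot service, individual checks are normally contributed as `HealthIndicator` beans, and Actuator combines them into one status. The plain-Java sketch below illustrates only that aggregation idea under a simplified rule: the service is UP only if every check is UP, and a check that throws counts as DOWN. The names `HealthSketch`, `register`, `details` and `overall` are hypothetical, and this is not Actuator's code.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Illustrative health aggregation (simplified; not Spring Boot Actuator's implementation).
final class HealthSketch {
    enum Status { UP, DOWN }

    private final Map<String, BooleanSupplier> checks = new LinkedHashMap<>();

    HealthSketch register(String name, BooleanSupplier check) {
        checks.put(name, check);
        return this;
    }

    // Runs every check; a check that throws is reported as DOWN rather than crashing the endpoint.
    Map<String, Status> details() {
        Map<String, Status> result = new LinkedHashMap<>();
        checks.forEach((name, check) -> {
            Status status;
            try {
                status = check.getAsBoolean() ? Status.UP : Status.DOWN;
            } catch (RuntimeException e) {
                status = Status.DOWN;
            }
            result.put(name, status);
        });
        return result;
    }

    // Overall status is UP only when every component is UP.
    Status overall() {
        return details().containsValue(Status.DOWN) ? Status.DOWN : Status.UP;
    }
}
```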

Example:

Add Spring Boot Actuator dependency in your pom.xml:

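```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
```

Expose the health endpoint over HTTP in the application.yml file. Spring Boot already exposes health over HTTP by default, so this setting matters mainly once you customize the list of exposed endpoints:

```yaml
management:
  endpoints:
    web:
      exposure:
        include: health
```

The health endpoint is then available at /actuator/health. It returns {"status":"UP"} while the service is healthy, so load balancers and orchestrators can poll it and take failing instances out of rotation.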

7. Service Discovery and Load Balancing

Using service discovery tools like Eureka and load balancers like Ribbon (deprecated in favor of Spring Cloud LoadBalancer) helps distribute traffic and route around failed instances.
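Spring Cloud LoadBalancer's default strategy is round-robin. The plain-Java sketch below shows the idea, plus skipping instances that a hypothetical `isHealthy` predicate reports as down. In practice the discovery server usually removes dead instances before the client sees them; Eureka, for example, evicts instances that stop sending heartbeats. `RoundRobinSketch` and `choose` are illustrative names, not Spring Cloud APIs.

```java
import java.util.List;
import java.util.Optional;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Predicate;

// Illustrative client-side round-robin selection that skips unhealthy instances.
final class RoundRobinSketch {
    private final AtomicInteger position = new AtomicInteger();

    Optional<String> choose(List<String> instances, Predicate<String> isHealthy) {
        int n = instances.size();
        for (int i = 0; i < n; i++) {
            // floorMod keeps the index valid even after the counter overflows
            int index = Math.floorMod(position.getAndIncrement(), n);
            String candidate = instances.get(index);
            if (isHealthy.test(candidate)) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty(); // no healthy instance: the caller should fall back
    }
}
```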

Example with Spring Cloud LoadBalancer:

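Annotate a RestTemplate bean with @LoadBalanced so that requests addressed to a logical service name are routed to one of that service's registered instances. For example, http://inventory-service/..., where inventory-service is a hypothetical service ID. This requires spring-cloud-starter-loadbalancer on the classpath: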

```java
import org.springframework.cloud.client.loadbalancer.LoadBalanced;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.client.RestTemplate;

@Configuration
public class LoadBalancerConfig {

    @Bean
    @LoadBalanced // resolve service names in request URLs through Spring Cloud LoadBalancer
    public RestTemplate restTemplate() {
        return new RestTemplate();
    }
}
```

8. Message Queues

Using message queues like RabbitMQ, Kafka, or AWS SQS decouples services and improves fault tolerance. Messages can be retried, stored, or redirected to a dead-letter queue if processing fails.
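Brokers implement redelivery and dead-lettering themselves. Examples are RabbitMQ dead-letter exchanges, an SQS redrive policy with `maxReceiveCount`, or a dead-letter topic in Kafka. The in-memory sketch below only illustrates the logic: a failing message is redelivered a limited number of times, then parked so it stops blocking the rest of the queue. `DeadLetterSketch` and `drain` are hypothetical names, and message bodies are assumed to be unique.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.function.Consumer;

// Illustrative consumer loop: bounded redelivery, then dead-lettering (in-memory only).
final class DeadLetterSketch {
    private DeadLetterSketch() {}

    // Drains the queue and returns the messages that exhausted maxDeliveries.
    // Assumes message bodies are unique, since delivery counts are keyed by content.
    static List<String> drain(Queue<String> queue, Consumer<String> handler, int maxDeliveries) {
        Map<String, Integer> deliveries = new HashMap<>();
        List<String> deadLetters = new ArrayList<>();
        while (!queue.isEmpty()) {
            String message = queue.poll();
            int attempt = deliveries.merge(message, 1, Integer::sum);
            try {
                handler.accept(message);
                deliveries.remove(message);
            } catch (RuntimeException e) {
                if (attempt >= maxDeliveries) {
                    deadLetters.add(message); // park for inspection instead of retrying forever
                    deliveries.remove(message);
                } else {
                    queue.add(message); // redeliver later, behind the other messages
                }
            }
        }
        return deadLetters;
    }
}
```

Re-queueing at the tail means one "poison" message does not hold up healthy messages behind it. The dead-letter list gives operators a place to inspect and replay failures.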

Conclusion

Improving fault tolerance in Spring-based microservices involves a combination of patterns and practices:

- Circuit Breakers to prevent cascading failures.

- Retry Mechanisms to handle transient failures.

- Bulkhead Pattern to isolate failures.

- Timeouts to avoid indefinite waits.

- Fallback Methods for graceful degradation.

- Health Checks for monitoring service status.

- Service Discovery and Load Balancing for distributing traffic.

- Message Queues for decoupling services.

By combining these strategies, you can significantly improve the resilience of your microservices architecture. The system can then keep functioning, possibly in a degraded mode, when individual components fail or become temporarily unavailable.
